Data Science & Analytics

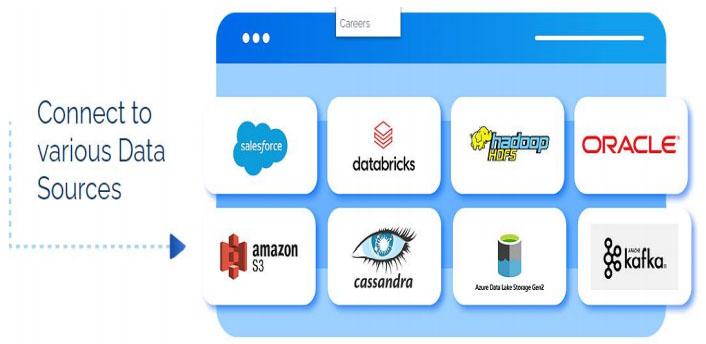

Infobahn is an implementation partner for Sparkflows (www.sparkflows.io), a powerful self-service Data Science and Analytics product built for the enterprise. Seamlessly connect to your data in a wide variety of data stores; clean, enrich and prepare it; build best-in-class machine learning models with the machine learning library of your choice; and deploy them on any of the public clouds.

Sparkflows scales seamlessly from megabytes to petabytes while remaining fully extensible for your environment. Add custom processors, time-series feature generation, data cleaning or machine learning capabilities to fit your needs. Onboard hundreds of users onto the platform and enable them to collaborate on advanced data and machine learning solutions.

Create workflows with 250+ prebuilt processors, or code in the language of your choice: Python, Java, Scala or SQL.

Key Features:

- 250+ Processors running on Apache Spark, providing Data Profiling, Machine Learning, ETL, NLP, OCR and Visualization.

- An intelligent Workflow Editor providing Schema Inference, Schema Propagation and Interactive Execution.

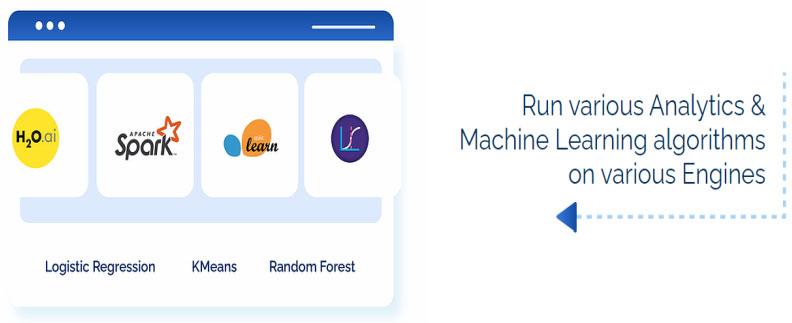

- Machine Learning covering Classification, Clustering and Regression, with complex feature generation using an array of processors.

- Streaming Analytics with Spark Streaming and connectors to Kafka, Flume and Twitter.

- Data Cleaning, Data Profiling and ETL covering Summary Statistics, SQL, Row Filters, Column Filters, Joins, etc., with the ability to write SQL, Scala and Jython within the workflow.

- Reading and writing various file formats, including CSV/TSV, Avro, Parquet, JSON, PDF, images, etc.

- Reading from and writing to various sources, including Hive, RDBMS/JDBC, HBase, Cassandra, Solr and Elasticsearch.

- NLP using OpenNLP and StanfordNLP. OCR using Tesseract.

- Powerful Visualization with Processors and Streaming Dashboards for streaming data.

- Workflow Scheduling.

- Smooth deployment on the edge node of an Apache Spark cluster, on-premises or in the cloud.

- Our solution enables you to build end-to-end Big Data applications and to run complex Analytics, Machine Learning and Data Pipelines in minutes on Apache Spark.

- It provides a Workflow Designer, 250+ Processors/Operators, Workflow Execution, Dashboards, Developer Integration, REST APIs, etc.

- It allows developers to write their own processors and operators and plug them in.

- It has both batch and streaming engines running on Apache Spark, connects to various big data sources (HDFS, Hive, HBase, Kafka, Elasticsearch, S3, Redshift, etc.) and seamlessly handles both structured and unstructured data.

Typical Use Cases:

- Self-Serve Big Data Analytics

- Log Analytics

- Entity Resolution

- Machine Learning, NLP, OCR

- Recommendations, Churn Prediction, Sentiment Analysis (Customer 360)

- Clickstream Analysis

- Demand Prediction

- Search Optimization, Product Recommendations

- Network Optimization & Analytics

- Marketing/Merchandising/Operation Analytics

Collaborative Self-Serve Advanced Analytics & Data Science

Connect, combine, manage and understand all the data from multiple sources on a single platform.

View your ML models, compare them and use them for predictions.

View your data in charts, maps, etc. Bring them together into reports from various executions, and seamlessly build dashboards to interact with your data.

ML/AI at the Speed of Business

Collaboratively build best-in-class Machine Learning applications in hours using 300+ pre-built drag-and-drop processors in Sparkflows Big Data ML Workbench, and make data-driven decisions in real time.

Load

Ingest data from a wide variety of sources with our 25+ ready-to-use connectors. Can't find the one you need? Build it yourself or ask us.

Enrich

De-dupe, aggregate, join and clean data with 50+ data processors. You can even bring in third-party data to enrich your own and generate deeper insights.

Feature

Identify the features that affect the model, or simply run unsupervised learning, with just a few clicks.

Build Model

Choose candidate models, train and test them, and pick the one that predicts most accurately. Re-train with more data for better results.

Deploy

Deploy on the stack of your choice: deploy ML models via a Docker image, or otherwise, on any of the cloud providers.